MCP for All of Your Databases: Giving AI Tools Access to Your Data

Streamline your data science workflow

One of the most practical ways to make AI tools like Cursor or Claude genuinely useful for data teams is by connecting them directly to your databases.

Until recently, that wasn’t easy. Giving these tools access to live data or metadata required custom APIs, wrappers, or endless prompt engineering.

That’s where MCP comes in: it standardizes how AI environments connect to external systems like Postgres, MySQL, BigQuery, and MongoDB.

In this guide, I’ll show you how to quickly set up and configure an MCP server for your database so you can query and analyze data directly from your AI workspace.

This will be a technical walkthrough, but it’s much simpler than you might expect.

Let’s get to it!

First, what is MCP?

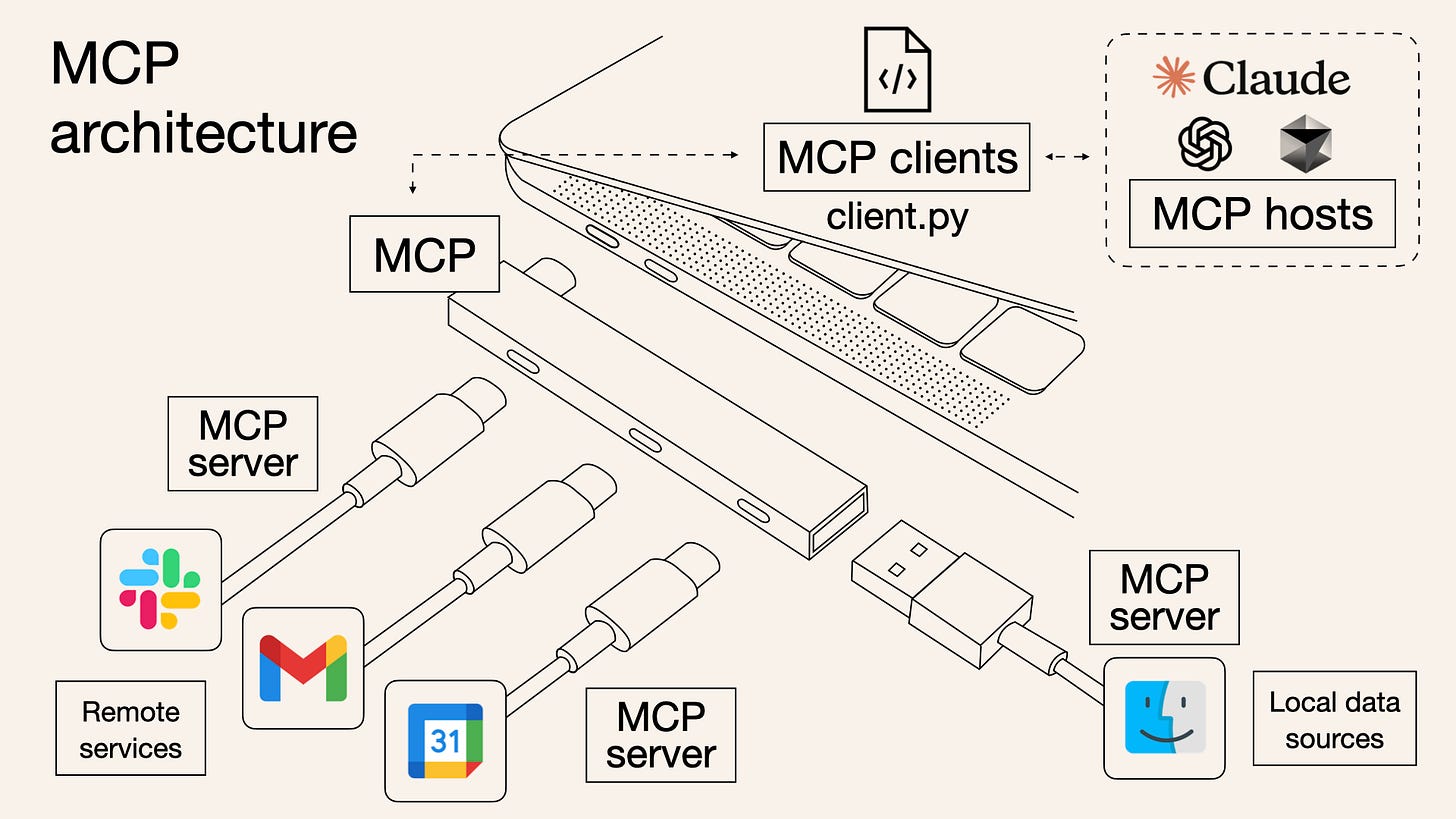

At its core, MCP (Model Context Protocol) is a framework that standardizes how AI agents connect to external systems such as databases, APIs, file stores, or any tool that holds useful context.

You can think of it as a structured alternative to one-off API integrations, designed specifically for AI workflows. Instead of every tool exposing a unique REST endpoint and every agent writing custom integration code, MCP defines a consistent protocol for discovery, messaging, and execution.
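Under the hood, MCP messages are JSON-RPC 2.0 objects, and over the stdio transport each message travels as a single line of JSON. The minimal Python sketch below only builds the messages a client sends for the handshake and for tool discovery; it does not start or talk to a server. The client name and the protocol version string are illustrative assumptions. Execution works the same way: the client sends a tools/call request carrying a tool name and its arguments.

```python
import json
from typing import Any, Dict, Optional


def jsonrpc_line(method: str, params: Optional[Dict[str, Any]] = None,
                 request_id: Optional[int] = None) -> str:
    """Serialize one JSON-RPC 2.0 message as a single newline-terminated line.

    Requests carry an "id" (the server must answer them); notifications do not.
    """
    message: Dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if request_id is not None:
        message["id"] = request_id
    if params is not None:
        message["params"] = params
    return json.dumps(message) + "\n"


# Handshake: the client announces itself and the protocol revision it speaks.
initialize = jsonrpc_line(
    "initialize",
    {
        "protocolVersion": "2025-06-18",  # one published MCP spec revision (assumed here)
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical client
    },
    request_id=1,
)

# After the server replies, the client confirms with a notification (no id).
initialized = jsonrpc_line("notifications/initialized")

# Discovery: ask the server which tools it exposes.
list_tools = jsonrpc_line("tools/list", request_id=2)

if __name__ == "__main__":
    for line in (initialize, initialized, list_tools):
        print(line, end="")
```

Because every server speaks this same small vocabulary, a client only has to implement it once to work with any MCP server.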

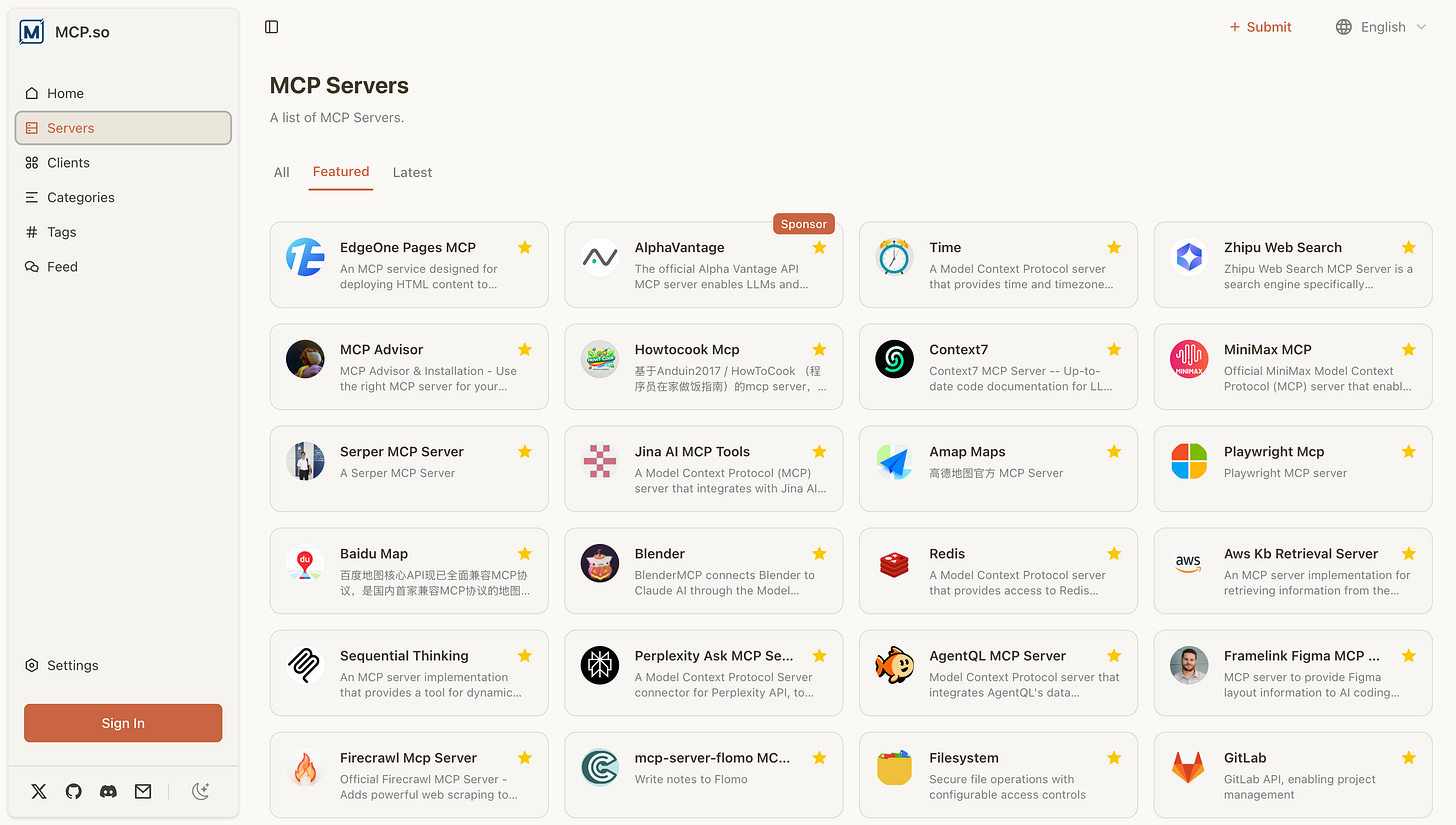

Because any MCP-compatible client can talk to any MCP server, servers are easy to share. That’s why so many MCP server directories now exist, where you can find servers others have built for different purposes and download them for your own use.

Although you can probably find an MCP server for your database in one of these directories, I’m going to show you a better alternative…

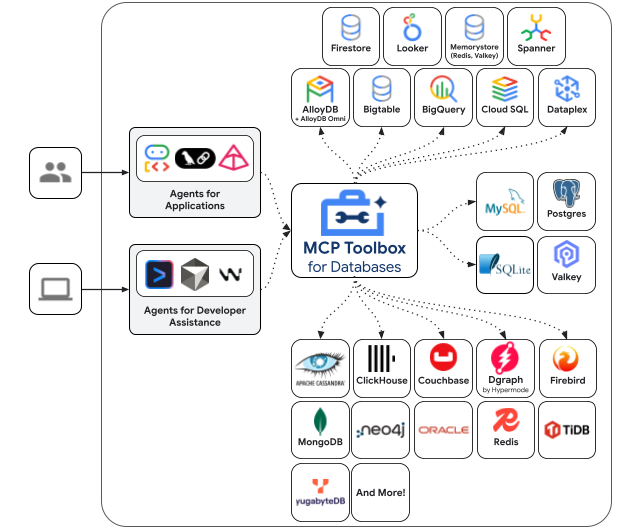

MCP Toolbox for Databases

MCP Toolbox for Databases is an open-source framework developed by Google that automatically spins up an MCP server for your database (Postgres, MySQL, BigQuery, MongoDB, etc.) using a short YAML configuration.

The main advantage of using this tool is that it already takes care of most of the boilerplate code needed to expose your database through MCP. It comes with several built-in tools that cover the most common use cases out of the box:

- list_tables: Lists all tables in the connected database.
- execute_sql: Executes SQL queries and returns results in a structured format.
- list_active_queries: Displays currently running queries for monitoring and debugging.

…and many more, depending on the database type.
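When a client like Cursor invokes one of these tools, it sends an MCP tools/call request and gets back a result whose content is a list of typed blocks (text, for example) plus an isError flag. Here is a minimal Python sketch of both sides of that exchange. It assumes the execute_sql tool takes a single string argument named sql; the tool's own input schema, returned by tools/list, is the authority on that. The function names are hypothetical.

```python
import json
from typing import Any, Dict, List


def execute_sql_request(request_id: int, sql: str) -> str:
    """Build one newline-terminated tools/call request for the execute_sql tool.

    Assumes the tool's only argument is a string named "sql".
    """
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": "execute_sql", "arguments": {"sql": sql}},
    }
    return json.dumps(message) + "\n"


def tool_result_text(response_line: str) -> List[str]:
    """Return the text blocks from a tools/call response, raising on either kind of failure."""
    response: Dict[str, Any] = json.loads(response_line)
    if "error" in response:
        # Protocol-level failure: the request itself was rejected (for example, an unknown tool name).
        raise RuntimeError(response["error"].get("message", "JSON-RPC error"))
    result = response["result"]
    texts = [block["text"] for block in result.get("content", []) if block.get("type") == "text"]
    if result.get("isError"):
        # Tool-level failure: the tool ran and reported a problem inside the result.
        raise RuntimeError("; ".join(texts) or "tool reported an error")
    return texts


if __name__ == "__main__":
    print(execute_sql_request(1, "SELECT COUNT(*) FROM pg_stat_activity;"), end="")
```

Keeping the two failure paths apart matters: the MCP spec asks servers to report tool failures inside the result (isError) so the model can see them and react, while a JSON-RPC error means the request never reached the tool. The exact text inside the blocks depends on the server.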

And if you need additional functionality, you can easily define your own tools by adding just a few lines to a tools.yaml file. This gives you the flexibility to extend or override the default behavior without writing server code from scratch.

Let me show you a few examples of how you can start using it…

Setting up a Postgres MCP server with Cursor AI

Installing the toolbox on macOS or Linux is as easy as running:

```bash
brew install mcp-toolbox
```

For additional instructions, check out the documentation.

Let’s say you have a Postgres database and want to connect it to Cursor AI so you can read data, write queries, and run them without ever leaving your workspace.

Here is all it takes to do that:

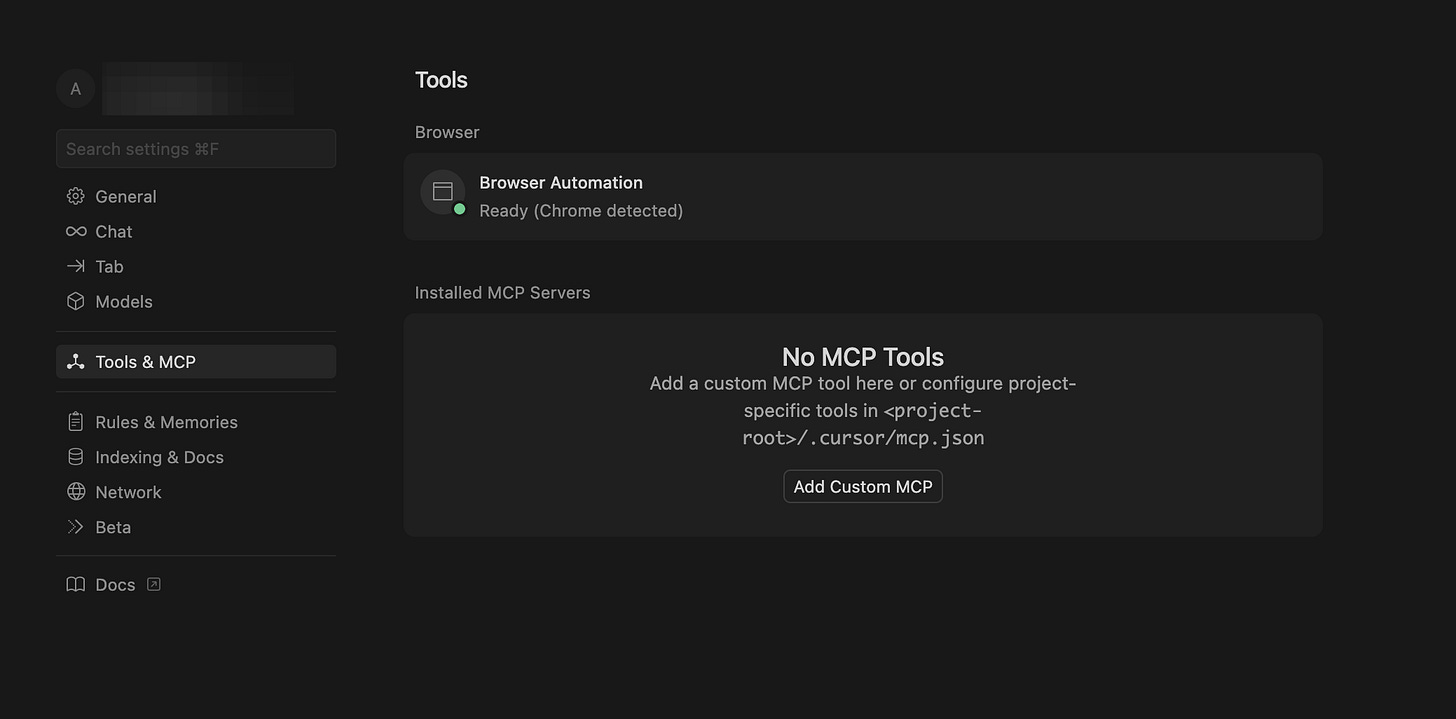

Step 1: Click on “Add Custom MCP” in the Tools & MCP tab of your Settings.

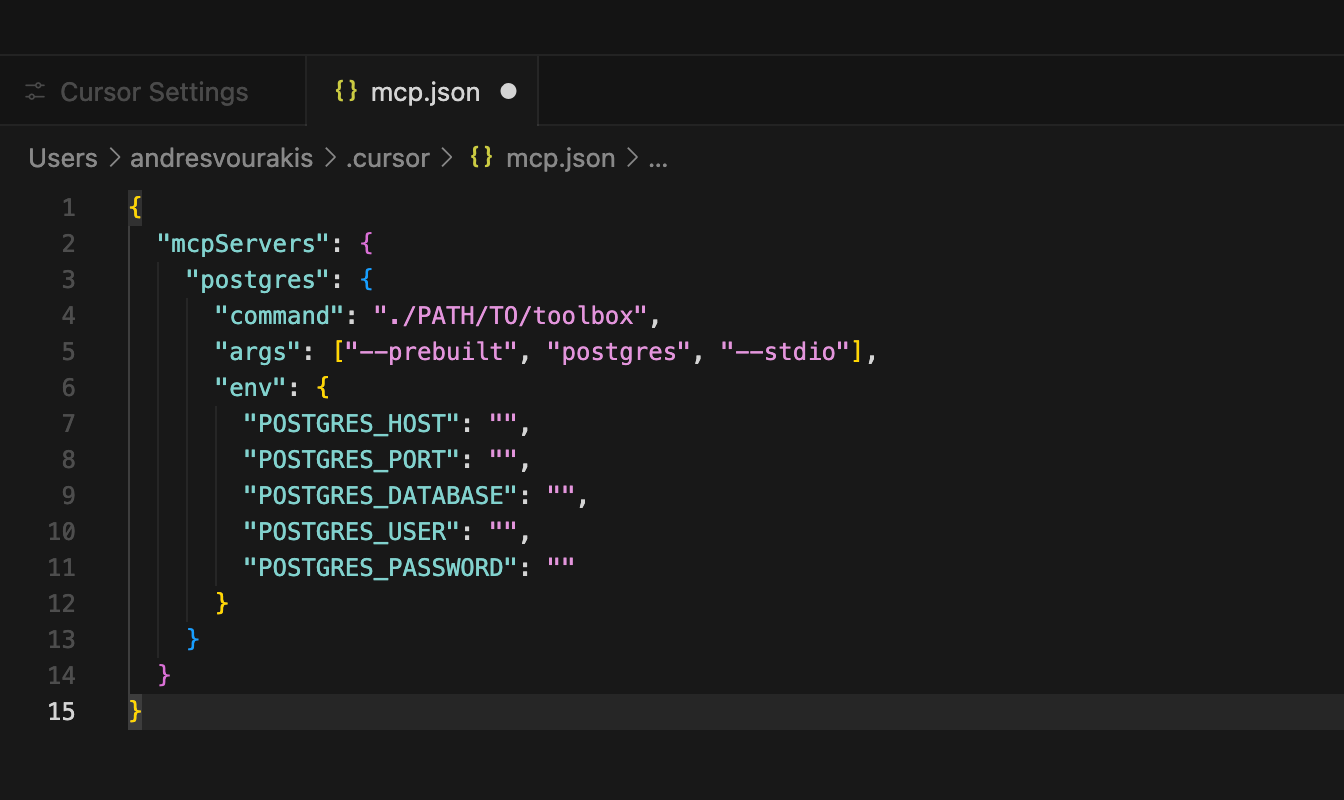

Step 2: Add your Cursor MCP configuration

This mcp.json file tells Cursor which MCP servers to start (or connect to) when it launches. In this example, all you need to do is set the value of the “command” key to the path of your toolbox binary and fill in your database credentials.
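Because mcp.json is edited by hand, two mistakes are easy to make: pasting curly quotes from a web page (which makes the JSON invalid) and pointing “command” at the wrong path. Here is a small Python sketch, not part of Cursor or Toolbox, that checks an mcp.json for those problems and for the “your-…” placeholder values used in this guide. The function name check_mcp_config is hypothetical.

```python
import json
from pathlib import Path
from typing import List


def check_mcp_config(text: str) -> List[str]:
    """Return human-readable problems found in an mcp.json document (empty list if none)."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        # Typical cause: curly quotes pasted instead of straight double quotes.
        return [f"invalid JSON: {exc}"]

    servers = config.get("mcpServers") if isinstance(config, dict) else None
    if not isinstance(servers, dict) or not servers:
        return ["missing or empty 'mcpServers' object"]

    problems: List[str] = []
    for name, server in servers.items():
        if not isinstance(server, dict):
            problems.append(f"{name}: server entry should be an object")
            continue
        command = server.get("command")
        if not isinstance(command, str) or not command:
            problems.append(f"{name}: no 'command' set")
        elif not Path(command).expanduser().exists():
            # Relative paths are checked against the directory this script runs in.
            problems.append(f"{name}: command not found at {command}")
        if not isinstance(server.get("args", []), list):
            problems.append(f"{name}: 'args' should be a list of strings")
        env = server.get("env", {})
        if isinstance(env, dict):
            for key, value in env.items():
                # Flags placeholder values such as "your-host" or "your-password".
                if isinstance(value, str) and value.startswith("your-"):
                    problems.append(f"{name}: {key} still has a placeholder value")
    return problems


if __name__ == "__main__":
    import sys

    config_path = Path(sys.argv[1] if len(sys.argv) > 1 else "mcp.json")
    issues = check_mcp_config(config_path.read_text(encoding="utf-8"))
    print("\n".join(issues) if issues else "no problems found")
```

Relative paths are resolved from wherever you run the script, which may not match how Cursor resolves them, so an absolute path to the toolbox binary is the safer choice.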

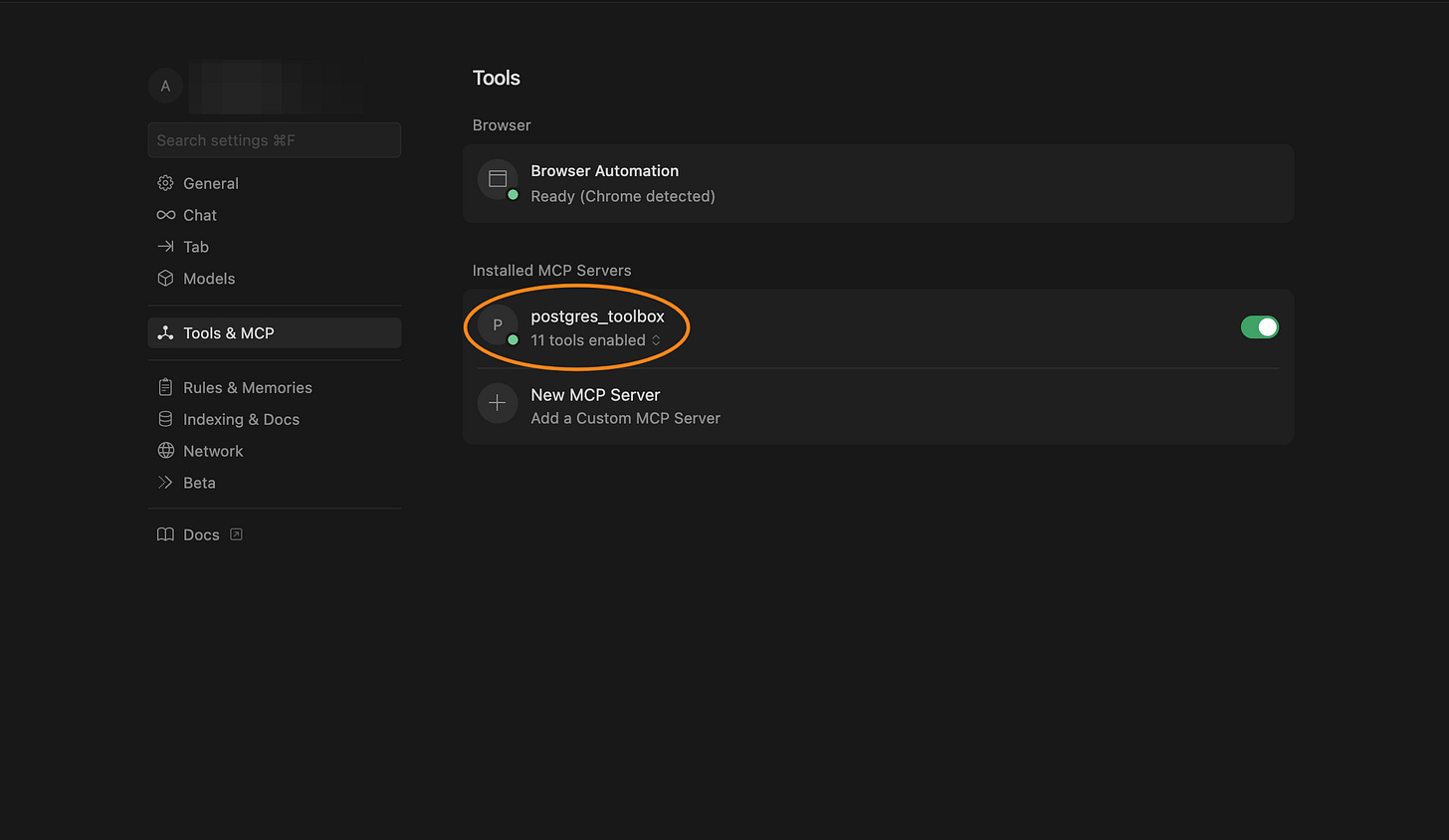

That’s it! If you see this, your MCP server has been set up successfully.

This is a quick preview of how you can call your tools from a chat:

By the way, here is the mcp.json configuration so you can easily copy and paste it:

```json
{
  "mcpServers": {
    "postgres_toolbox": {
      "command": "./PATH/TO/toolbox",
      "args": ["--prebuilt", "postgres", "--stdio"],
      "env": {
        "POSTGRES_HOST": "your-host",
        "POSTGRES_PORT": "5432",
        "POSTGRES_DATABASE": "your-database",
        "POSTGRES_USER": "your-username",
        "POSTGRES_PASSWORD": "your-password"
      }
    }
  }
}
```

Creating additional tools with tools.yaml

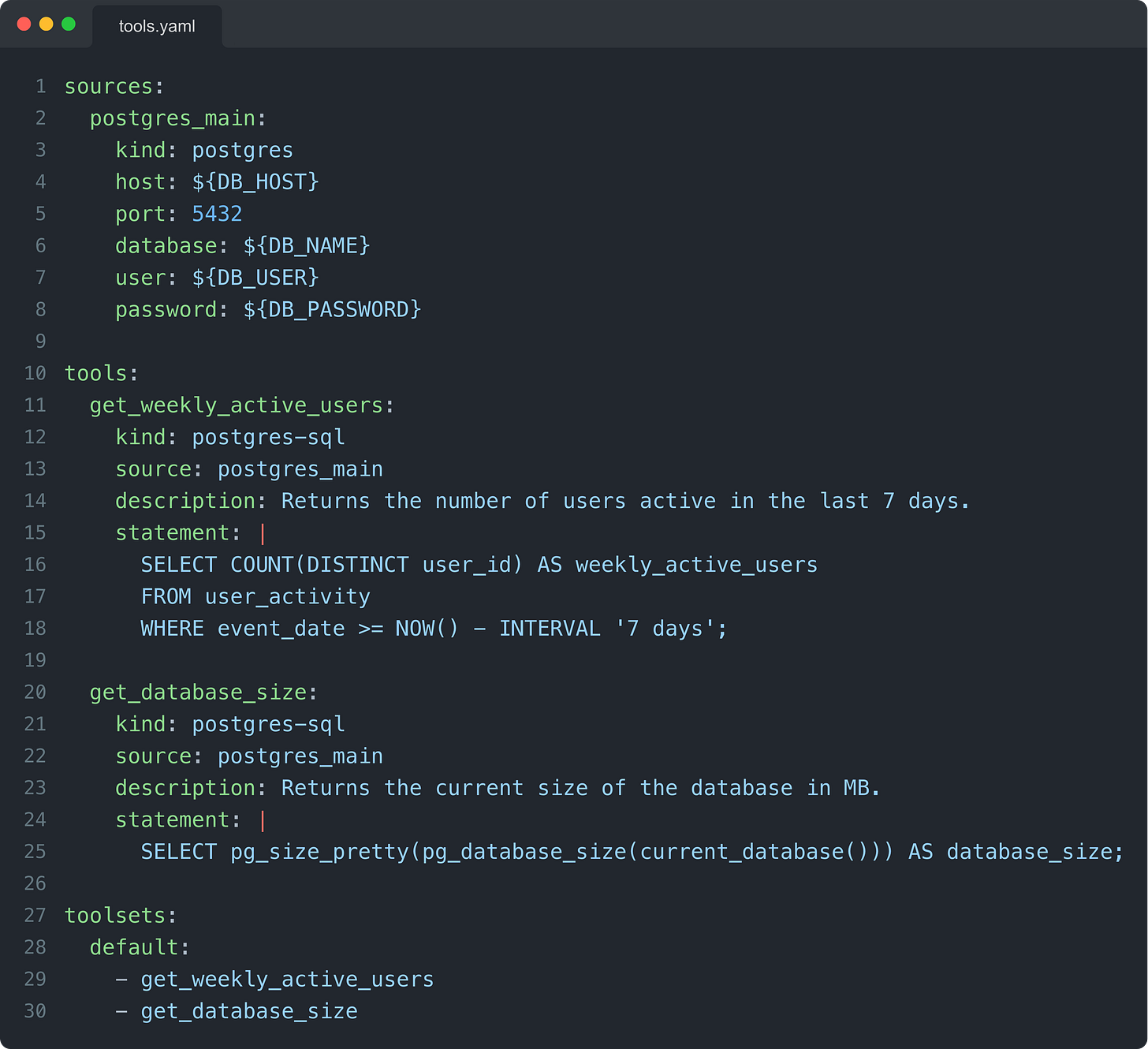

Now, let’s assume that you need tools that aren’t already provided by default. To do that, you can easily define your desired tools inside a tools.yaml file.

In this example, I create two tools as shortcuts for actions I run often:

- get_weekly_active_users
- get_database_size
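Here is a hypothetical tools.yaml for two tools like these, held in a small Python script so it can be written to disk and sanity-checked. The layout (a sources section with kind: postgres, and tools with kind: postgres-sql, a source, a description, and a statement) follows the Postgres example in the Toolbox docs; the events table, its user_id and event_time columns, and the source name my-pg-source are made up for illustration. The ${POSTGRES_HOST}-style placeholders rely on Toolbox’s environment-variable substitution, so the actual values come from the env block of mcp.json. Field names can change between releases, so check the docs for the version you installed.

```python
from pathlib import Path

# Hypothetical tools.yaml for MCP Toolbox. The sources/tools layout follows the
# Toolbox Postgres example; the events table, its columns, and the source name
# "my-pg-source" are illustrative assumptions.
TOOLS_YAML = """\
sources:
  my-pg-source:
    kind: postgres
    host: ${POSTGRES_HOST}
    port: ${POSTGRES_PORT}
    database: ${POSTGRES_DATABASE}
    user: ${POSTGRES_USER}
    password: ${POSTGRES_PASSWORD}

tools:
  get_weekly_active_users:
    kind: postgres-sql
    source: my-pg-source
    description: Count distinct users with at least one event in the last 7 days.
    statement: |
      SELECT COUNT(DISTINCT user_id) AS weekly_active_users
      FROM events
      WHERE event_time >= NOW() - INTERVAL '7 days';

  get_database_size:
    kind: postgres-sql
    source: my-pg-source
    description: Return the size of the current database in human-readable form.
    statement: SELECT pg_size_pretty(pg_database_size(current_database())) AS size;
"""


def write_tools_file(path: Path = Path("tools.yaml")) -> Path:
    """Write the YAML above to disk and return the path it was written to."""
    path.write_text(TOOLS_YAML, encoding="utf-8")
    return path


if __name__ == "__main__":
    print(f"wrote {write_tools_file()}")
```

get_database_size uses only built-in Postgres functions (pg_database_size, pg_size_pretty), so it should work on any database; get_weekly_active_users needs its table and column names adjusted to your schema.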

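Now, if you wanted to connect this server to Cursor AI, this is what the mcp.json file would look like. Note that --tools-file replaces --prebuilt postgres, so the server loads the tools defined in your tools.yaml rather than the prebuilt Postgres set, and the POSTGRES_* values in env only take effect if tools.yaml references them (for example, host: ${POSTGRES_HOST}).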

```json
{
  "mcpServers": {
    "postgres_toolbox": {
      "command": "./PATH/TO/toolbox",
      "args": [
        "--stdio",
        "--tools-file",
        "./PATH/TO/tools.yaml"
      ],
      "env": {
        "POSTGRES_HOST": "your-host",
        "POSTGRES_PORT": "5432",
        "POSTGRES_DATABASE": "your-database",
        "POSTGRES_USER": "your-username",
        "POSTGRES_PASSWORD": "your-password"
      }
    }
  }
}
```

How am I using MCP servers in my workflow?

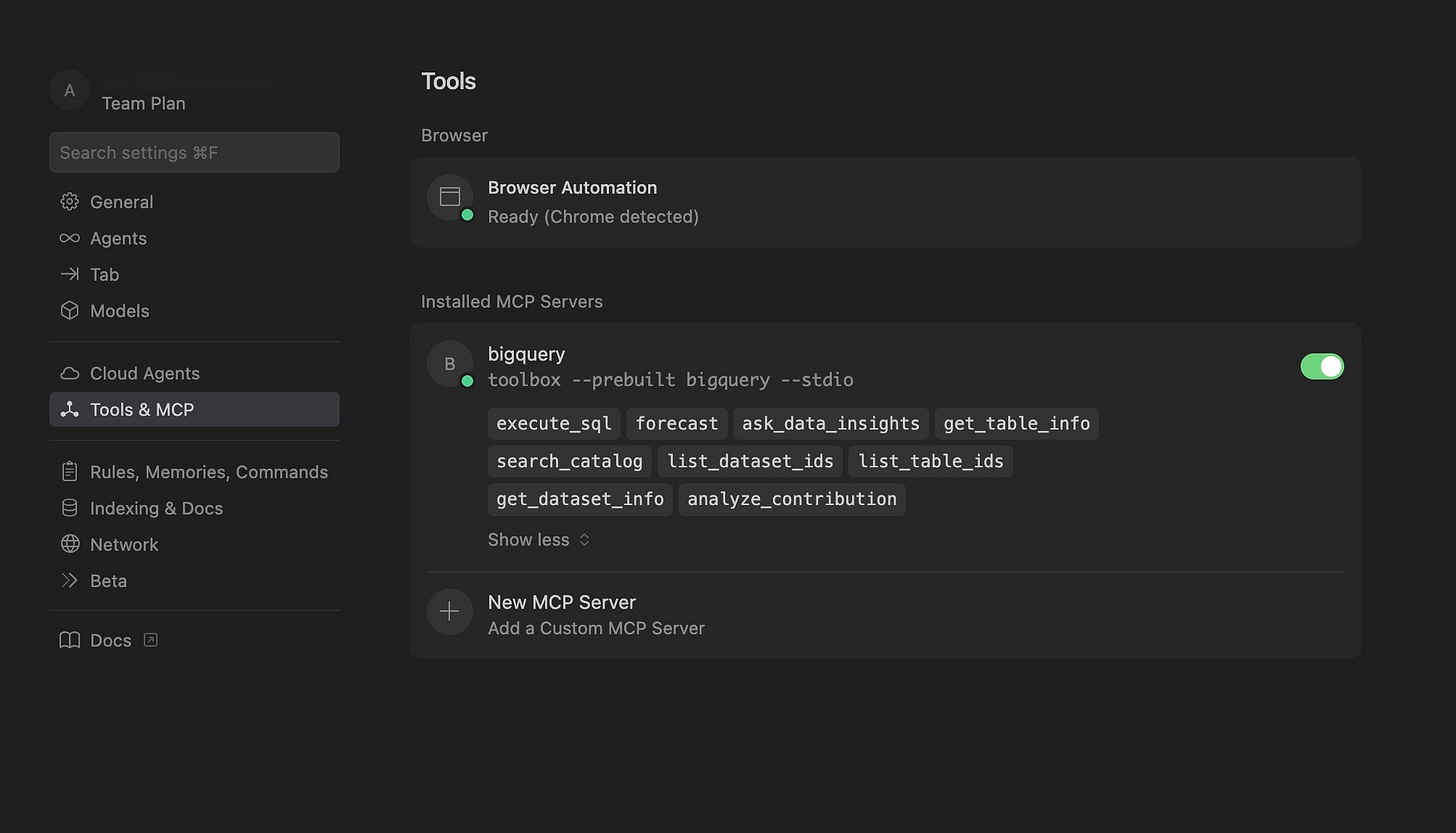

At work, I’ve been using the BigQuery MCP Server (built using the toolbox) to connect Cursor directly to my data.

Most of my coding already happens in Cursor, so when I do data analysis, I no longer switch tools to write queries and pull in data; I can do everything inside Cursor.

I also recently added the Confluence MCP server, which is handy when I need quick access to documentation or notes while I’m exploring data.

It’s a small change in setup, but it’s made a big difference. Everything I need, from code to queries to context, now lives in one workspace.

A few other great resources:

🚀 Ready to take the next step? Build real AI workflows and sharpen the skills that keep data scientists ahead.

💼 Job searching? Applio helps your resume stand out and land more interviews.

🤖 Struggling to keep up with AI/ML? Neural Pulse is a 5-minute, human-curated newsletter delivering the best in AI, ML, and data science.

Thank you for reading! I hope this guide helps you get the most out of your AI tools.

- Andres Vourakis

Before you go, please hit the like ❤️ button at the bottom of this email to help support me. It truly makes a difference!
